This line of research examines the neurocognitive correlates of egocentric spatial processing. Neuroscience research has shown a dissociation between two types of spatial transformations: allocentric spatial transformations, which involve an object-to-object representational system and encode information about the location of one object or its parts with respect to other objects, and egocentric perspective transformations, which involve a self-to-object representational system.

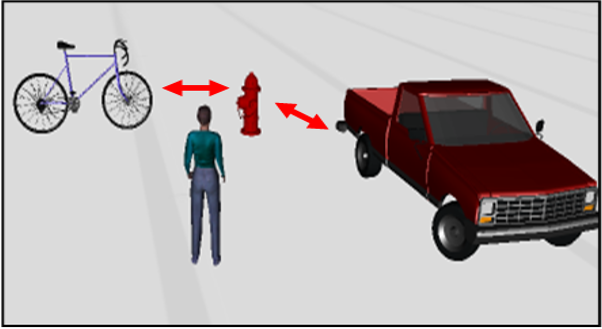

Allocentric system (object-to-object):

Codes information about the location of one object or its parts with respect to other objects.

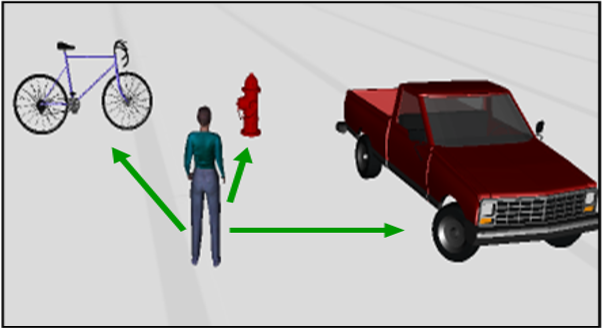

Egocentric (self-to-object, viewer-centered):

Codes information about the location of an object with respect to the body axis of the self (left-right, front-back, up-down). It is closely tied to visually-guided motor actions and sensory-motor systems.

Allocentric and egocentric spatial abilities are sometimes referred to in the literature as “small-scale” and “large-scale” spatial abilities, respectively, because of their close relation to small-scale and large-scale environmental spaces or representations. The dissociation between them is of great importance because research indicates that different neural and cognitive networks are involved in allocentric and egocentric spatial processing, so a person with high allocentric spatial ability does not necessarily have high egocentric spatial ability, and vice versa. Importantly, allocentric and egocentric spatial abilities are responsible for success in different fields and show different relationships to real-world performance. The encoding of visual-spatial stimuli in relation to egocentric spatial frames of reference has been shown to be critical for successful performance in many real-world tasks whenever one is required to imagine and manipulate objects from one’s own body-centered perspective and/or imagine how these objects will look from a different perspective. Indeed, the ability to perform egocentric spatial transformations has been found to predict performance on a variety of wayfinding and spatial orientation tasks, as well as in medical surgery (in particular robotic surgery), dentistry, and telerobotics. Furthermore, it is specifically egocentric spatial ability that was found to be an important predictor of the drilling performance of aerospace technicians, and it is important for firefighters’ performance during training operations and on the fireground.

In contrast, allocentric manipulation of objects or arrays of objects (e.g., mental rotation of cubes or other geometrical figures) involves imagining movement relative to an object-based frame of reference, which specifies the location of one object (or its parts) with respect to other objects. Allocentric spatial ability was found not to be a reliable predictor of wayfinding performance in real environments, but it is an important predictor of achievement in a variety of STEM disciplines, such as mathematics, graph and diagram interpretation, chemistry, and physics. Yet most existing spatial assessment tools measure allocentric but not egocentric spatial ability. Allocentric spatial ability tests are currently used to predict performance in a variety of domains, including domains that require egocentric rather than allocentric processing. For example, the Perceptual Ability Test (PAT), which is used to select students for dental schools in many countries, consists mostly of items assessing allocentric spatial ability. It is, however, egocentric spatial ability that is required for success in dental performance (Kozhevnikov et al., 2013). Similarly, while allocentric spatial tests, such as mental rotation, are still used to predict navigational performance, it is egocentric spatial ability that matters most for navigating in real-world large-scale space (Kozhevnikov et al., 2006). It is not surprising, then, that allocentric spatial ability tests such as mental rotation or the PAT have only weak predictive validity, and thus the development of egocentric spatial ability tests is necessary to improve the process of personnel selection.

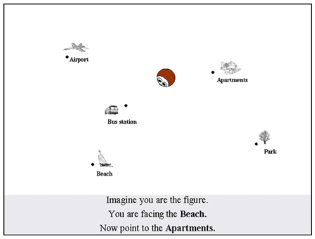

Although new paper-and-pencil (Kozhevnikov & Hegarty, 2001) and computerized (Kozhevnikov et al., 2006) Perspective-Taking assessments have been developed recently, and do in fact measure egocentric spatial ability to some extent (Kozhevnikov & Hegarty, 2001), they are problematic because of their “small-scale” nature: they tend to elicit an allocentric rather than an egocentric frame of reference, so that these tests are often solved by mentally rotating the stimulus rather than by reorienting one’s perspective.

My lab is currently designing and validating an innovative diagnostic tool to assess egocentric spatial ability: the 3D Immersive Virtual Reality-based Perspective-Taking Ability test (3D VR-PTA). Our hypothesis is that an immersive virtual reality PTA task (3D VR-PTA) could be a better measure of egocentric spatial ability than the currently available 2D PTA tasks and that, as a result, performance on the immersive 3D VR-PTA task might be more strongly related to egocentric ability than performance on the desktop 2D PTA task. We expect that an immersive 3D virtual reality environment might be particularly effective for assessing large-scale egocentric spatial ability because of its immersivity (the user is immersed in the scene, “looking inside out”, rather than viewing it exocentrically from the outside) and because of characteristics such as a larger field of view than that of a traditional computer screen.

Relevant research articles: